Overview

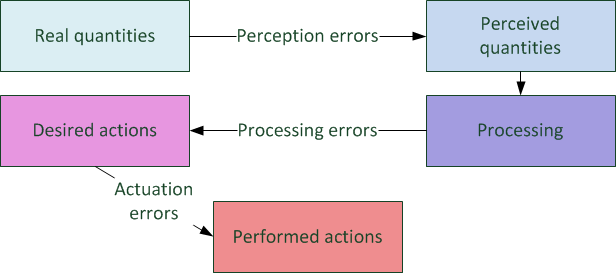

The driver state provides a generic mechanism to introduce imperfection into car-following and lane change models. Although errors may enter the driving process at many stages [see Figure 1], SUMO only applies errors at the perception stage (see below for details).

Figure 1: Errors in the driving process.

In practice, errors are added to two inputs of the car-following model: the spacing (gap) to the leader and the speed difference. To integrate the driver state into contributed car-following models, the implementation in the standard Krauss model can be adopted (see MSCFModel_Krauss::stopSpeed() and MSCFModel_Krauss::followSpeed()). Currently (SUMO 1.8.0), it is only implemented for the standard Krauss and IDM models.

Equipping a Vehicle with a Driver State

To apply the imperfect driving functionality to a vehicle, equip it with a Driver State Device; see the description of equipment procedures and use the device name driverstate.

An example definition that equips one vehicle with a Driver State is shown below. With the default parameters, the driver state models perfect awareness, i.e. no errors. To observe effects from this device, initialAwareness must be set to a value below 1.

    <routes>
        ...
        <vehicle id="v0" route="route0" depart="0">
            <param key="has.driverstate.device" value="true"/>
            <param key="device.driverstate.initialAwareness" value="0.5"/>
        </vehicle>
        ...
    </routes>
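When many vehicles need the device, the same `<param>` children can be generated programmatically. The sketch below uses only Python's standard `xml.etree.ElementTree` module to build a `<vehicle>` element like the XML example above; the helper name `driverstate_vehicle` is hypothetical and not part of SUMO or its Python tools.

```python
import xml.etree.ElementTree as ET


def driverstate_vehicle(veh_id: str, route: str, depart: float, **params: float) -> ET.Element:
    """Build a <vehicle> element equipped with a Driver State device (hypothetical helper).

    Each keyword argument becomes a device.driverstate.<name> param,
    e.g. initialAwareness=0.5.
    """
    veh = ET.Element("vehicle", id=veh_id, route=route, depart=str(depart))
    # Equip the vehicle with the device.
    ET.SubElement(veh, "param", key="has.driverstate.device", value="true")
    # Write every driver state parameter as a child <param> element.
    for name, value in params.items():
        ET.SubElement(veh, "param", key=f"device.driverstate.{name}", value=str(value))
    return veh


vehicle = driverstate_vehicle("v0", "route0", 0, initialAwareness=0.5)
print(ET.tostring(vehicle, encoding="unicode"))
```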

The following table gives the full list of parameters for the Driver State Device along with their default values. Each parameter must be specified as a child element of the form `<param key="device.driverstate.<PARAMETER NAME>" value="<PARAMETER VALUE>"/>` inside the appropriate demand definition element (e.g. `<vehicle ... />`, `<vType ... />`, or `<flow ... />`). See Modeling of Perception Errors for details of the error dynamics.

| Parameter | Type | Default | Description |
|---|---|---|---|
| initialAwareness | float | 1.0 | The initial awareness assigned to the driver state. At awareness 1, the device does not produce any perception errors! |
| errorTimeScaleCoefficient | float | 100.0 | Time scale constant that controls the time scale of the underlying error process. |
| errorNoiseIntensityCoefficient | float | 0.2 | Noise intensity constant that controls the noise intensity of the underlying error process. |
| speedDifferenceErrorCoefficient | float | 0.15 | Scaling coefficient for the error applied to the speed difference input of the car-following model. |
| headwayErrorCoefficient | float | 0.75 | Scaling coefficient for the error applied to the distance input of the car-following model. |
| freeSpeedErrorCoefficient | float | 0.0 | Scaling coefficient for the error applied to the free driving speed. |
| speedDifferenceChangePerceptionThreshold | float | 0.1 | Constant controlling the threshold for the perception of changes in the speed difference. |
| headwayChangePerceptionThreshold | float | 0.1 | Constant controlling the threshold for the perception of changes in the distance input. |
| minAwareness | float | 0.1 | The minimal value for the driver awareness (a technical parameter to avoid a blow-up of the term 1/minAwareness). |
| maximalReactionTime | float (s) | original action step length | The value of the driver's actionStepLength attained at minimal awareness. The actionStepLength scales linearly between this value and the original one as the awareness varies between minAwareness and 1.0. |
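The linear scaling described for maximalReactionTime can be written as a one-line interpolation. The sketch below assumes that awareness 1.0 maps to the original action step length and awareness minAwareness maps to maximalReactionTime, as the table states; the clamping of the awareness and the absence of any rounding to multiples of the simulation step length are assumptions of this sketch, not confirmed SUMO behaviour. The function name is hypothetical.

```python
def scaled_action_step_length(awareness: float, original_asl: float,
                              maximal_reaction_time: float,
                              min_awareness: float = 0.1) -> float:
    """Interpolate the action step length linearly in the awareness (sketch).

    awareness == 1.0           -> original_asl
    awareness == min_awareness -> maximal_reaction_time
    """
    # Assumption: clamp the awareness to the admissible range [minAwareness, 1.0].
    awareness = min(1.0, max(min_awareness, awareness))
    # 0.0 at full awareness, 1.0 at minimal awareness.
    fraction = (1.0 - awareness) / (1.0 - min_awareness)
    # Note: no rounding to the simulation step length is applied here.
    return original_asl + fraction * (maximal_reaction_time - original_asl)


# Halfway between minAwareness (0.1) and 1.0, the step length is halfway between 1 s and 2 s.
asl = scaled_action_step_length(0.55, original_asl=1.0, maximal_reaction_time=2.0)
```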

Modeling of Perception Errors

An underlying Ornstein-Uhlenbeck process drives the errors, which are applied to the perceived inputs of the car-following model. The characteristic time scale and driving noise intensity of the process are determined by the driver state's awareness, which is meant to serve as an interface between the traffic situation and the driver state dynamics. We have

$$
\begin{aligned}
\text{errorTimeScale} &= \text{errorTimeScaleCoefficient} \cdot \text{awareness}(t) \\
\text{errorNoiseIntensity} &= \text{errorNoiseIntensityCoefficient} \cdot \left(1 - \text{awareness}(t)\right)
\end{aligned}
$$
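To illustrate how the awareness shapes the error process, here is a minimal sketch of an Ornstein-Uhlenbeck process whose parameters follow the two formulas above, using the default coefficients from the parameter table. It assumes the SDE form dX = -X/τ dt + σ dW and uses the textbook exact discretisation; SUMO's own update step may differ in detail, so treat this as an illustration rather than a reimplementation. The class and method names are hypothetical.

```python
import math
import random
from typing import Optional


class OUProcess:
    """Illustrative Ornstein-Uhlenbeck process dX = -X/tau dt + sigma dW (hypothetical)."""

    def __init__(self, state: float = 0.0, rng: Optional[random.Random] = None) -> None:
        self.state = state
        self.time_scale = 1.0       # tau, overwritten by set_awareness()
        self.noise_intensity = 0.0  # sigma, overwritten by set_awareness()
        self.rng = rng if rng is not None else random.Random()

    def set_awareness(self, awareness: float,
                      time_scale_coefficient: float = 100.0,
                      noise_intensity_coefficient: float = 0.2) -> None:
        # errorTimeScale = errorTimeScaleCoefficient * awareness(t)
        self.time_scale = time_scale_coefficient * awareness
        # errorNoiseIntensity = errorNoiseIntensityCoefficient * (1 - awareness(t))
        self.noise_intensity = noise_intensity_coefficient * (1.0 - awareness)

    def step(self, dt: float) -> float:
        # Requires time_scale > 0, which a positive minAwareness guarantees.
        decay = math.exp(-dt / self.time_scale)
        # Exact transition: the mean decays by `decay`, the variance is sigma^2*tau/2*(1 - decay^2).
        std = self.noise_intensity * math.sqrt(0.5 * self.time_scale * (1.0 - decay * decay))
        self.state = decay * self.state + std * self.rng.gauss(0.0, 1.0)
        return self.state


error = OUProcess(rng=random.Random(42))
error.set_awareness(0.5)  # tau = 50, sigma = 0.1
samples = [error.step(0.1) for _ in range(100)]
```

At awareness 1 the noise intensity vanishes, so any existing error simply decays; lower awareness both shortens the time scale and raises the noise intensity.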

The state error(t) of the error process at time t is scaled and added to the input parameters of the car-following model as described below.

Leader speed difference and gap errors

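$$
\begin{aligned}
\text{perceivedSpeedDifference} &= \text{trueSpeedDifference} + \text{speedDifferenceErrorCoefficient} \cdot \text{headway}(t) \cdot \text{error}(t) \\
\text{perceivedHeadway} &= \text{trueHeadway} + \text{headwayErrorCoefficient} \cdot \text{headway}(t) \cdot \text{error}(t)
\end{aligned}
$$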
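The two formulas translate directly into code. This sketch assumes that headway(t) is the true gap to the leader at time t (the same quantity as trueHeadway) and takes error(t) as a given number, e.g. the current state of the error process; the function name is hypothetical.

```python
def perceived_leader_inputs(true_headway: float, true_speed_difference: float,
                            error: float,
                            headway_error_coefficient: float = 0.75,
                            speed_difference_error_coefficient: float = 0.15) -> tuple[float, float]:
    """Return (perceivedHeadway, perceivedSpeedDifference) for one leader (sketch)."""
    # Both errors scale linearly with the distance to the leader.
    perceived_headway = true_headway + headway_error_coefficient * true_headway * error
    perceived_speed_difference = (true_speed_difference
                                  + speed_difference_error_coefficient * true_headway * error)
    return perceived_headway, perceived_speed_difference


# 20 m gap, 2 m/s speed difference, error state 0.1, default coefficients.
gap, dv = perceived_leader_inputs(20.0, 2.0, 0.1)
```

With these values the driver perceives a gap of about 21.5 m and a speed difference of about 2.3 m/s; the same error state distorts far-away leaders more than close ones.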

Note that the state error(t) of the error process is not scaled directly with the awareness; the awareness controls the errors only indirectly, through the parameters of the process. Further, the scale of the perception error is assumed to grow linearly with the distance to the perceived object.

Finally, the driver state induces an update of the input to the car-following model only if the perceived values have changed to a sufficient degree. The conditions for updating the car-following input are:

- headway: $\lvert \text{perceivedHeadway} - \text{expectedHeadway} \rvert > \text{headwayChangePerceptionThreshold} \cdot \text{trueGap} \cdot (1 - \text{awareness})$

- speed difference: $\lvert \text{perceivedSpeedDifference} - \text{expectedSpeedDifference} \rvert > \text{speedDifferenceChangePerceptionThreshold} \cdot \text{trueGap} \cdot (1 - \text{awareness})$

Here, the expected quantities are

$$
\begin{aligned}
\text{expectedHeadway} &= \text{lastRecognizedHeadway} - \text{expectedSpeedDifference} \cdot \text{elapsedTimeSinceLastRecognition} \\
\text{expectedSpeedDifference} &= \text{lastRecognizedSpeedDifference}
\end{aligned}
$$
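The update rule can be sketched as a pure function: extrapolate the expected values from the last recognized ones, then pass on each perceived value only if it deviates from its expectation by more than the awareness-dependent threshold. This sketch treats headway and speed difference independently and leaves it to the (hypothetical) caller to store the returned values as the new "last recognized" ones and to reset the elapsed time when an update occurs; these bookkeeping details are assumptions, not SUMO's documented behaviour.

```python
def perceived_inputs_for_model(last_headway: float, last_speed_difference: float,
                               elapsed: float,
                               perceived_headway: float, perceived_speed_difference: float,
                               true_gap: float, awareness: float,
                               headway_threshold: float = 0.1,
                               speed_difference_threshold: float = 0.1) -> tuple[float, float]:
    """Return the (headway, speed difference) handed to the car-following model (sketch)."""
    # Expected values, extrapolated from the last recognized state.
    expected_speed_difference = last_speed_difference
    expected_headway = last_headway - expected_speed_difference * elapsed
    # Tolerances grow with the gap and shrink to zero at full awareness.
    headway_tol = headway_threshold * true_gap * (1.0 - awareness)
    speed_tol = speed_difference_threshold * true_gap * (1.0 - awareness)
    # Update each input only if the perceived change is large enough.
    headway = (perceived_headway
               if abs(perceived_headway - expected_headway) > headway_tol
               else expected_headway)
    speed_difference = (perceived_speed_difference
                        if abs(perceived_speed_difference - expected_speed_difference) > speed_tol
                        else expected_speed_difference)
    return headway, speed_difference


# Last recognized: 20 m gap closing at 1 m/s, 2 s ago -> expected gap 18 m; tolerance 1.0 here.
small_change = perceived_inputs_for_model(20, 1, 2, 18.5, 1.5, true_gap=20, awareness=0.5)
large_change = perceived_inputs_for_model(20, 1, 2, 16.0, 3.0, true_gap=20, awareness=0.5)
```

In the first call both deviations (0.5) stay below the tolerance, so the driver keeps the extrapolated values; in the second both exceed it and the perceived values are passed on.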

Free flow error

The freeSpeedErrorCoefficient can be used to model imprecision in the driver's perception of their own speed and thereby induce fluctuations in the free-flow speed.

The error model is:

$$
\text{perceivedSpeed} = \text{speed} + \text{freeSpeedErrorCoefficient} \cdot \text{error}(t) \cdot \sqrt{\text{speed}}
$$
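As a small sketch of the free flow error model (function name hypothetical), the perceived own speed follows directly from the formula; with the default coefficient of 0.0 the speed is perceived exactly.

```python
import math


def perceived_own_speed(speed: float, error: float,
                        free_speed_error_coefficient: float = 0.0) -> float:
    """perceivedSpeed = speed + freeSpeedErrorCoefficient * error(t) * sqrt(speed) (sketch)."""
    # The error grows with the square root of the (non-negative) true speed.
    return speed + free_speed_error_coefficient * error * math.sqrt(speed)


# 25 m/s, error state 0.5, coefficient 0.1 -> about 25.25 m/s perceived.
v = perceived_own_speed(25.0, 0.5, 0.1)
```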

Supported carFollowModels

All models support the driverstate effect of freeSpeedError.

The following models support the driverstate effects speedDifferenceError and headwayError:

- Krauss

- IDM

- CACC

- ACC

Note

The models CACC and ACC only apply driverstate device effects if the vType attribute applyDriverState="1" is defined (any value other than 0 works).

Publication

See section 2.3.1.3 "Modelling of a Decreased Post-ToC Driver Performance" in the TransAID project report "Modelling, simulation and assessment of vehicle automations and automated vehicles’ driver behaviour in mixed traffic".